In today’s data-driven world, artificial intelligence (AI) is transforming industries by providing unprecedented capabilities in automation, prediction, and decision-making. However, as we integrate AI systems more deeply into our lives and businesses, the need for explainability, interpretability, and ethical responsibility becomes critical. This blog post explores how leveraging graph technology can enhance these aspects, creating a more reliable and ethical AI landscape.

Unique Partnership and Innovation

At the heart of this transformation is our unique partnership between TigerGraph and EBCONT. TigerGraph, with its best-of-breed graph database technology, excels in discovering deep insights from complex, connected data. TigerGraph’s platform stands out for its ability to handle massive datasets with exceptional speed and scalability, enabling real-time analytics and advanced machine learning applications. TigerGraph’s technology supports intricate data relationships, providing unparalleled performance for sophisticated analytics tasks.

At EBCONT, a global IT solutions provider, we integrate TigerGraph’s cutting-edge technology with our customers’ business vision and leverage modern data management practices. Our innovation team manages end-to-end solution delivery, encompassing a wide range of expertise, including custom business application development, UX/UI design, data integration, and advanced analytics. Together, with TigerGraph’s unparalleled graph technology and our expertise in creating comprehensive solutions, we are positioned to drive transformative changes across industries.

The Revolutionary Impact of Generative AI

Generative AI, particularly through the use of Large Language Models (LLMs), represents a significant leap forward in AI development. These models are capable of generating human-like text, making them valuable for a wide range of applications from chatbots to content creation. LLMs learn from vast amounts of data, allowing them to perform complex language tasks and provide sophisticated responses.

While generative AI offers immense potential, it also brings challenges, with privacy being a paramount concern. Rather than exposing sensitive data to public websites or external services, companies must ensure their data remains within their own secure environments. When LLMs are trained or operated in public environments, there is a risk that the models inadvertently learn and memorize confidential information, potentially leading to privacy breaches. Ensuring data privacy and regulatory compliance therefore becomes a complex issue that requires advanced solutions to safeguard sensitive information.

The Strengths and Limitations of Vector RAG Systems

Retrieval-Augmented Generation (RAG) systems have emerged as a robust and widely embraced solution for tackling the privacy concerns inherent in LLMs. These systems combine the power of LLMs with vector search capabilities, enhancing their ability to retrieve and generate relevant information quickly and securely. RAG systems transform ingested images, documents, and audio into embeddings, that is, dense numeric vectors. These vectors can be efficiently compared with the embedding of a user query through similarity measures. Because the retrieved content is supplied to the model at query time rather than used for training, private data is not memorized by the model. RAG systems excel at indexing and searching large datasets, making them powerful tools for information retrieval.
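As a minimal sketch of the similarity step described above, the following Python snippet ranks documents by cosine similarity to a query. The three-dimensional vectors, the document names, and the helpers `cosine_similarity` and `top_k` are illustrative assumptions; in practice an embedding model and a vector database would take their place.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)

def top_k(query_vec, index, k=2):
    """Return the k document ids whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical embeddings; real models produce hundreds of dimensions.
index = {
    "invoice_policy": [0.9, 0.1, 0.0],
    "travel_policy": [0.1, 0.9, 0.1],
    "security_handbook": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # assumed embedding of the user's question
results = top_k(query, index, k=2)
print(results)
```

Only the text of the top-ranked documents would then be passed to the LLM as context, which is what keeps the remaining private data out of the model's view.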

However, vector-only RAG systems face significant limitations. They often struggle with bias, a lack of contextual understanding, and inaccurate responses, commonly referred to as “hallucinations”. All of these issues are inherited from the underlying LLMs. Bias arises because these models can perpetuate the biases present in their training data, leading to skewed outputs that reflect existing patterns rather than objective, deterministic calculations. Furthermore, vector-only RAG systems have difficulty grasping nuanced meaning that goes beyond the text itself, and so lack the deeper contextual understanding required for more complex reasoning.

These challenges stem from the complexities of natural language processing and the limitations of current algorithms, which may not always represent the full context and underlying relationships accurately. While vector-only RAG systems mitigate privacy concerns, advances in contextual understanding and bias reduction are still required to fully realize the potential of generative AI.

The Unique Strengths of Graph Technology

Graphs offer a compelling solution to the challenges faced by LLM-based and vector-only RAG systems. Unlike traditional data models, graphs excel at representing and consolidating heterogeneous and interconnected knowledge from diverse business domains.

A key strength of graphs is their ability to represent relationships between nodes – documents, concepts, entities – offering a holistic view of interconnected data points. This relationship structure allows for a more comprehensive understanding of the context and dependencies within the data, which is crucial for contextual reasoning and for generating deterministic, relevant responses.
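To make the idea of nodes and relationships concrete, here is a small sketch in plain Python. The `Graph` class, the relation names, and the example entities are hypothetical, and a graph database such as TigerGraph would store and traverse this structure natively rather than in application code. The traversal collects every relationship within a fixed number of hops of a starting node, which is the kind of context a purely vector-based lookup does not provide.

```python
from collections import defaultdict, deque

class Graph:
    """Tiny in-memory property graph: nodes linked by named relationships."""

    def __init__(self):
        self.edges = defaultdict(list)  # node -> list of (relation, neighbor)

    def add_edge(self, source, relation, target):
        # Store both directions so traversal can follow a link from either end.
        self.edges[source].append((relation, target))
        self.edges[target].append((f"inverse_{relation}", source))

    def neighborhood(self, start, max_hops):
        """Breadth-first search returning (source, relation, target) triples
        for every node first reached within max_hops of start."""
        seen = {start}
        queue = deque([(start, 0)])
        triples = []
        while queue:
            node, depth = queue.popleft()
            if depth == max_hops:
                continue  # do not expand beyond the hop limit
            for relation, neighbor in self.edges[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    triples.append((node, relation, neighbor))
                    queue.append((neighbor, depth + 1))
        return triples

g = Graph()
g.add_edge("Contract-17", "signed_by", "Acme Corp")
g.add_edge("Acme Corp", "located_in", "Vienna")
g.add_edge("Contract-17", "covers", "Product-X")

one_hop = g.neighborhood("Contract-17", max_hops=1)
two_hops = g.neighborhood("Contract-17", max_hops=2)
print(two_hops)
```

Because the traversal follows explicit edges, the same question always yields the same set of facts.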

Furthermore, leveraging graphs can significantly reduce bias, as they incorporate a broader context and a more nuanced understanding of the relationships between data points. This helps in providing more balanced and unbiased outputs compared to traditional vector-only approaches. Additionally, graphs enhance content relevance by ensuring that the information retrieved and used is contextually accurate and pertinent to the query. They also maintain privacy more effectively by structuring data in a way that minimizes the exposure of sensitive information.

By addressing the limitations of vector-only RAG systems, graphs offer a powerful framework for developing more transparent, interpretable, and responsible AI systems, ultimately leading to better decision-making and strategic planning.

A Graph as the Digital Business Intelligence Hub

A graph serves as a lasting, digitized hub of a business’s expertise, seamlessly integrating human domain knowledge with enterprise process data. By representing these two pillars of organizational intelligence in a structured and interconnected manner, graphs become invaluable assets that can be leveraged across various business processes. This comprehensive digital blueprint of an organization allows businesses to:

- Preserve Expertise: Safeguard the specialized knowledge of experts, ensuring that critical information is not lost due to employee turnover or organizational changes.

- Enhance Decision-Making: Provide decision-makers with a holistic view of interconnected data points, enabling more informed and strategic choices.

- Optimize Processes: Streamline and improve business processes by integrating contextual knowledge into everyday operations, from customer service to product development.

- Support Innovation: Foster innovation by making it easier to identify patterns, correlations, and opportunities within the vast amounts of data that businesses generate.

By leveraging Graphs, businesses can ensure that their accumulated expertise and integrated data assets continue to drive value and innovation. This digital transformation not only enhances the efficiency and effectiveness of business operations but also ensures that the organization’s knowledge remains an enduring and dynamic asset.

Solving Real-World Problems with Graphs

Graphs are already making a tangible impact across various industries. By leveraging the unique strengths of graphs, organizations can address specific challenges with precision and reliability. Here are some key verticals where this technology is transforming operations:

Deepening Personalization

For customer-centric businesses, graphs enhance personalization and customer engagement by providing deeper insights into customer behavior and preferences. Applications in this vertical include:

- Product and Service Recommendations: Analyzing purchase patterns and user behavior to deliver highly personalized product and service recommendations, improving customer satisfaction and sales.

- Customer 360: Building comprehensive, 360-degree profiles of customers by integrating data from various touchpoints, enabling more personalized and effective customer interactions.

- Customer Insights: Gaining deeper insights into customer preferences and behaviors, allowing businesses to tailor their strategies and offerings to meet the unique needs of their customers.
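As a hedged illustration of the recommendation use case, the sketch below treats purchases as edges between customers and products and scores products that are two hops away, through customers with overlapping purchases. The data, the `recommend` helper, and the scoring rule (number of shared products) are assumptions chosen for brevity, not a description of a production recommender.

```python
from collections import Counter

# Hypothetical purchase edges: customer -> set of products bought.
purchases = {
    "alice": {"laptop", "mouse", "dock"},
    "bob": {"laptop", "mouse", "headset"},
    "carol": {"laptop", "dock", "monitor"},
    "dave": {"phone", "case"},
}

def recommend(customer, purchases, k=3):
    """Rank products bought by customers who share purchases with `customer`."""
    owned = purchases[customer]
    scores = Counter()
    for other, items in purchases.items():
        if other == customer:
            continue
        overlap = len(owned & items)  # shared products link the two customers
        if overlap == 0:
            continue
        for product in items - owned:
            scores[product] += overlap
    # Sort by score descending, then by name, so ties are deterministic.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [product for product, _ in ranked[:k]]

bob_recommendations = recommend("bob", purchases)
print(bob_recommendations)
```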

Uncovering Hidden Networks

Graphs can uncover hidden networks and relationships that are crucial for detecting and preventing fraudulent activities. The applications include:

- Fraud Detection: By analyzing complex transaction networks, graphs can identify unusual patterns and suspicious activities, enhancing the accuracy of fraud detection systems.

- Entity Resolution: Graphs can consolidate data from various sources to resolve ambiguities and correctly identify entities, improving data quality and reliability.

- Know Your Customer (KYC): Enhancing KYC processes by integrating diverse data points to build comprehensive profiles of customers, ensuring compliance and reducing risk.

- Anti-Money Laundering (AML): Identifying and analyzing intricate financial networks to detect and prevent money laundering activities, ensuring regulatory compliance and safeguarding financial institutions.
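Entity resolution in particular maps naturally onto a graph problem: records that share an identifier are connected, and each connected component is one real-world entity. The following sketch uses a union-find structure to compute those components. The record ids, the identifier strings, and the exact-match rule are simplifying assumptions; real systems typically add fuzzy matching and confidence scoring.

```python
from collections import defaultdict

def resolve_entities(records):
    """Group record ids that share any identifier value (email, phone, ...)."""
    parent = {rid: rid for rid in records}

    def find(x):
        # Follow parent links to the cluster root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Link every record to the first record seen with the same identifier.
    first_seen = {}
    for rid, identifiers in records.items():
        for ident in identifiers:
            if ident in first_seen:
                union(first_seen[ident], rid)
            else:
                first_seen[ident] = rid

    clusters = defaultdict(set)
    for rid in records:
        clusters[find(rid)].add(rid)
    return sorted(sorted(c) for c in clusters.values())

# Hypothetical records from three source systems.
records = {
    "crm-1": {"email:j.doe@example.com", "phone:555-0100"},
    "web-7": {"email:j.doe@example.com"},
    "app-3": {"phone:555-0100", "device:abc"},
    "crm-2": {"email:m.roe@example.com"},
}
clusters = resolve_entities(records)
print(clusters)
```

Note that `web-7` and `app-3` share no identifier directly; they end up in the same cluster only through `crm-1`, which is the kind of indirect link a graph makes visible.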

Making Faster Decisions

Graphs enable more agile and informed decision-making by providing a comprehensive view of interdependencies and relationships. Key applications include:

- Supply Chain Management: Enhancing visibility across the supply chain by mapping out complex relationships between suppliers, manufacturers, and distributors, leading to more efficient and responsive supply chain operations.

- Inventory Management: Optimizing inventory levels and reducing waste by understanding the relationships and dependencies between different inventory items and their usage patterns.

- Digital Twins: Creating digital replicas of physical assets with detailed interdependencies, enabling better monitoring, simulation, and optimization of operations.
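For the supply chain case, a simple reachability query already answers a common question: if one supplier is disrupted, who is affected downstream? The sketch below assumes a hypothetical `supplies` mapping and a helper named `downstream_impact`; both are illustrative and not tied to any particular product.

```python
from collections import deque

# Hypothetical "supplies" edges: each party -> the parties that depend on it.
supplies = {
    "chip_fab": ["board_maker"],
    "board_maker": ["laptop_plant", "server_plant"],
    "battery_supplier": ["laptop_plant"],
    "laptop_plant": ["eu_distributor"],
    "server_plant": [],
    "eu_distributor": [],
}

def downstream_impact(graph, disrupted):
    """Return every party reachable from `disrupted`, in breadth-first order."""
    affected = []
    seen = {disrupted}
    queue = deque([disrupted])
    while queue:
        node = queue.popleft()
        for dependent in graph.get(node, []):
            if dependent not in seen:
                seen.add(dependent)
                affected.append(dependent)
                queue.append(dependent)
    return affected

chip_impact = downstream_impact(supplies, "chip_fab")
battery_impact = downstream_impact(supplies, "battery_supplier")
print(chip_impact)
print(battery_impact)
```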

Augmented Intelligence: Combining Graphs with Generative AI

The integration of graphs with generative AI creates a powerful synergy, which we refer to here as augmented intelligence. This approach enhances GenAI systems by providing contextualized business reasoning, leading to interpretable, explainable, and ethically responsible AI outcomes. Moreover, it democratizes access to data-driven insights, so that users can comprehend and leverage them effectively.

Graphs improve contextual understanding by capturing relationships between entities, which allows AI systems to retrieve contextually relevant information from diverse knowledge sources. This enriched context improves the relevance and accuracy of AI responses, making the systems more effective at handling complex queries. Users can ask direct questions and receive complete answers based on the enterprise data landscape. Because the AI draws on verified and specialized knowledge sources, it delivers more accurate and relevant information, which is crucial for high-stakes decision-making where precision and reliability are paramount.
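One way to picture this grounding is a prompt whose context consists only of numbered facts pulled from the graph, so that an answer can cite the facts it relied on. The sketch below is an assumption-laden illustration: the triples, the helpers `facts_about` and `build_prompt`, and the prompt wording are hypothetical, the entities in the question are assumed to have been identified already, and the call to an actual LLM is deliberately left out.

```python
# Hypothetical knowledge graph stored as (subject, relation, object) triples.
TRIPLES = [
    ("Pump-A", "installed_in", "Plant-1"),
    ("Pump-A", "maintained_by", "Team-North"),
    ("Plant-1", "located_in", "Austria"),
    ("Pump-B", "installed_in", "Plant-2"),
]

def facts_about(entity, triples):
    """Return the triples that mention the entity as subject or object."""
    return [t for t in triples if entity in (t[0], t[2])]

def build_prompt(question, entities, triples):
    """Assemble a prompt whose context is limited to numbered graph facts."""
    facts = []
    for entity in entities:
        for triple in facts_about(entity, triples):
            if triple not in facts:  # avoid listing a shared fact twice
                facts.append(triple)
    lines = [
        f"[{i}] {s} {r.replace('_', ' ')} {o}"
        for i, (s, r, o) in enumerate(facts, 1)
    ]
    return (
        "Answer using only the numbered facts and cite the ones you used.\n"
        + "\n".join(lines)
        + f"\nQuestion: {question}"
    )

prompt = build_prompt(
    "Who maintains the pump in Plant-1?", ["Pump-A", "Plant-1"], TRIPLES
)
print(prompt)
```

Numbering the facts is a design choice that supports explainability: a reviewer can trace each statement in the answer back to a specific edge in the graph.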

By combining the strengths of graphs with the generative power of LLMs, organizations can develop AI systems that not only perform exceptionally well but also adhere to high standards of transparency and ethical responsibility. This synergy supports better decision-making, strategic planning, and operational efficiency, ultimately leading to more informed and reliable outcomes.

Practical Enterprise-Ready Implementation

Implementing the advanced approach of combining graphs with generative AI involves a well-defined data governance pipeline. This process ensures that data is accurately integrated, contextually enriched, and ethically managed. The key components of this pipeline include:

- Data Integration from Domain Experts:

  - Collection: Gather data from various sources, including databases, documents, and expert knowledge.

  - Normalization: Standardize data formats to ensure consistency and compatibility.

  - Validation: Verify the accuracy and reliability of the data through domain-expert review and automated checks.

  - Annotation: Enrich the data with metadata and annotations that capture domain-specific knowledge and nuances.

- Graph Creation:

  - Modeling: Design the schema of the graph to represent entities, relationships, and attributes relevant to the domain.

  - Population: Ingest data into the graph, linking entities and establishing relationships based on the schema.

  - Optimization: Optimize the graph for performance, ensuring efficient querying and retrieval of information.

  - Maintenance: Regularly update and refine the graph to incorporate new data and improve accuracy.

- Vector Search Integration:

  - Embedding Generation: Convert text, images, documents, and audio into high-dimensional vector embeddings using machine learning models.

  - Indexing: Organize these embeddings in a vector database to enable fast and efficient similarity searches.

  - Query Processing: Implement algorithms to compare user queries with the indexed embeddings, retrieving the most relevant information.

  - Relevance Tuning: Continuously refine the search algorithms to improve the relevance and accuracy of search results.

- Application of Language Models:

  - Model Selection: Choose appropriate LLMs that can interact with the graph and vector search systems.

  - Fine-Tuning: Customize the LLMs to align with the specific requirements and contexts of the enterprise.

  - Integration: Seamlessly integrate the LLMs with the graph and vector search to enable enriched and context-aware responses.

  - Monitoring: Continuously monitor the performance of the models, ensuring they deliver accurate and reliable outputs.

- User Experience:

  - Interface Design: Develop user-friendly interfaces that allow users to interact with the system easily and intuitively.

  - Personalization: Implement features that personalize the user experience based on individual preferences and behavior.

  - Feedback Mechanisms: Incorporate feedback loops that allow users to provide input on the system’s performance, which can be used to make ongoing improvements.

  - Training and Support: Provide training and support to users to ensure they can effectively utilize the system and understand its capabilities.

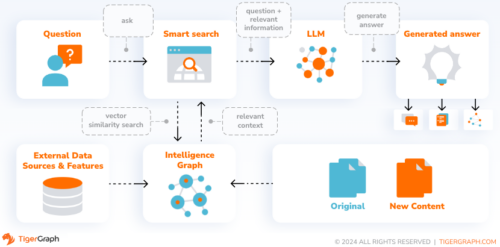

This image illustrates how these elements come together, providing a clear pathway from data ingestion to actionable insights. By structuring data governance in this manner, organizations can effectively manage their knowledge assets, ensuring accuracy, relevance, and ethical responsibility. This holistic approach not only enhances data quality and retrieval but also supports the creation of more transparent, interpretable, and responsible AI systems.
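The interplay between the vector search and graph stages of the pipeline above can be sketched in a few lines. In this hypothetical example, vector similarity selects seed documents, and graph edges then pull in further documents that mention the same entities. The embeddings, the `MENTIONS` edges, and the `hybrid_retrieve` helper are assumptions for illustration only, and the final step of handing the result to an LLM is omitted.

```python
import math

# Hypothetical document embeddings (the "Vector Search Integration" stage).
DOC_VECTORS = {
    "doc_warranty": [0.9, 0.1],
    "doc_returns": [0.7, 0.6],
    "doc_careers": [0.0, 1.0],
}
# Hypothetical graph edges (the "Graph Creation" stage): document -> entities.
MENTIONS = {
    "doc_warranty": {"Product-X", "Warranty-2Y"},
    "doc_returns": {"Product-X", "Return-Policy"},
    "doc_careers": {"HR"},
}

def cosine(a, b):
    """Cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def hybrid_retrieve(query_vec, k=1):
    """Vector search picks seed documents; the graph adds documents
    that share at least one entity with a seed."""
    ranked = sorted(
        DOC_VECTORS,
        key=lambda d: cosine(query_vec, DOC_VECTORS[d]),
        reverse=True,
    )
    seeds = ranked[:k]
    seed_entities = set().union(*(MENTIONS[d] for d in seeds))
    related = [d for d in ranked[k:] if MENTIONS[d] & seed_entities]
    return {"seeds": seeds, "entities": sorted(seed_entities), "related": related}

context = hybrid_retrieve([1.0, 0.0], k=1)
print(context)
```

Here `doc_returns` is included because it shares an entity with the seed document, while `doc_careers` is excluded because it has no graph connection to the seed.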

Conclusion

The integration of graph technology with generative AI represents a transformative step in AI development. By addressing critical challenges in privacy, bias, and contextual understanding, this approach enhances the transparency and responsibility of AI systems. As we advance, the continued focus on these principles will ensure that AI remains a force for positive and ethical progress in our data-driven world. The graph will be at the heart of this transformation, providing a stable foundation for leveraging human expertise in every aspect of business operations. This synergy of advanced AI and structured knowledge will pave the way for more reliable, responsible, and innovative solutions in the future.